Stop Using ChatGPT

Welcome to the first edition of the No Longer A Nincompoop with Nofil newsletter of 2025.

Here’s the tea 🍵

Google’s AI Studio website is all you need

Gemini Flash 2.0 🤏

How to use Gemini Pro 2.0 Experimental 🔬

Google’s o3 competitor 🤔

New Chinese AI model - of course it’s open source ⚡

NVIDIA’s personal AI supercomputer 🧑‍💻

AI will change education 📚

I had a wonderful break with friends and family and hope you did too. Now that I’m back to writing, I’m a bit confused as to where to begin…

I don’t know how it’s taken me this long to write about what Google has been doing, but, we’re finally here.

So, let’s talk about it.

Side Piece:

I’ll be building a few mobile apps this year and have already built one that lets you gamify healthy habits like going to the gym and sleeping on time.

You get points for being healthy and compete with friends and family to see who’s the healthiest.

You can check out a little video demo of the app on my instagram [Link].

And, if you’re wondering - yes, it is entirely written by AI. Roughly 25 hours of work, so about 3 working days.

I’ve never coded an iOS app before this.

You can build anything with AI 🙂.

Google’s Supremacy

For context:

Gemini Flash: Smaller model, designed for faster, less intensive tasks.

Gemini Pro: Larger, frontier model. This is their flagship model.

Google has announced its new Gemini Flash 2.0 model.

It is a phenomenal model, especially in terms of speed, quality and cost. It outperforms Gemini Pro 1.5 significantly.

Some of the data is very surprising.

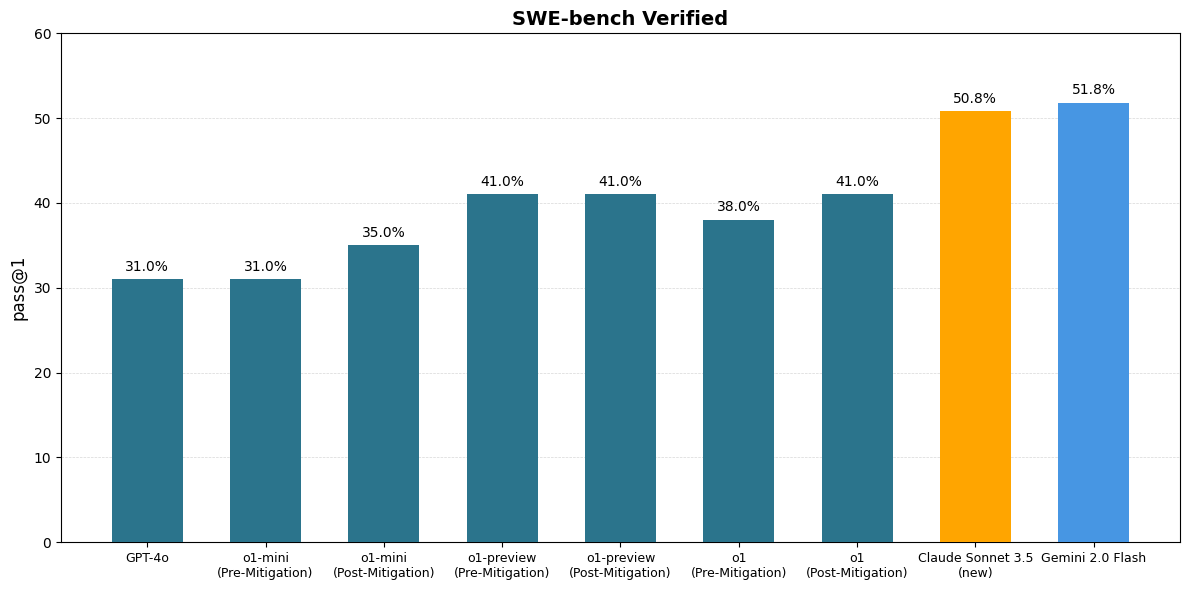

On the SWE-bench verified test, Flash 2.0 somehow not only beats every single iteration of OpenAI’s o1 models, it also beats GPT-4o and Claude 3.5 Sonnet.

This isn’t just a good model on tests either.

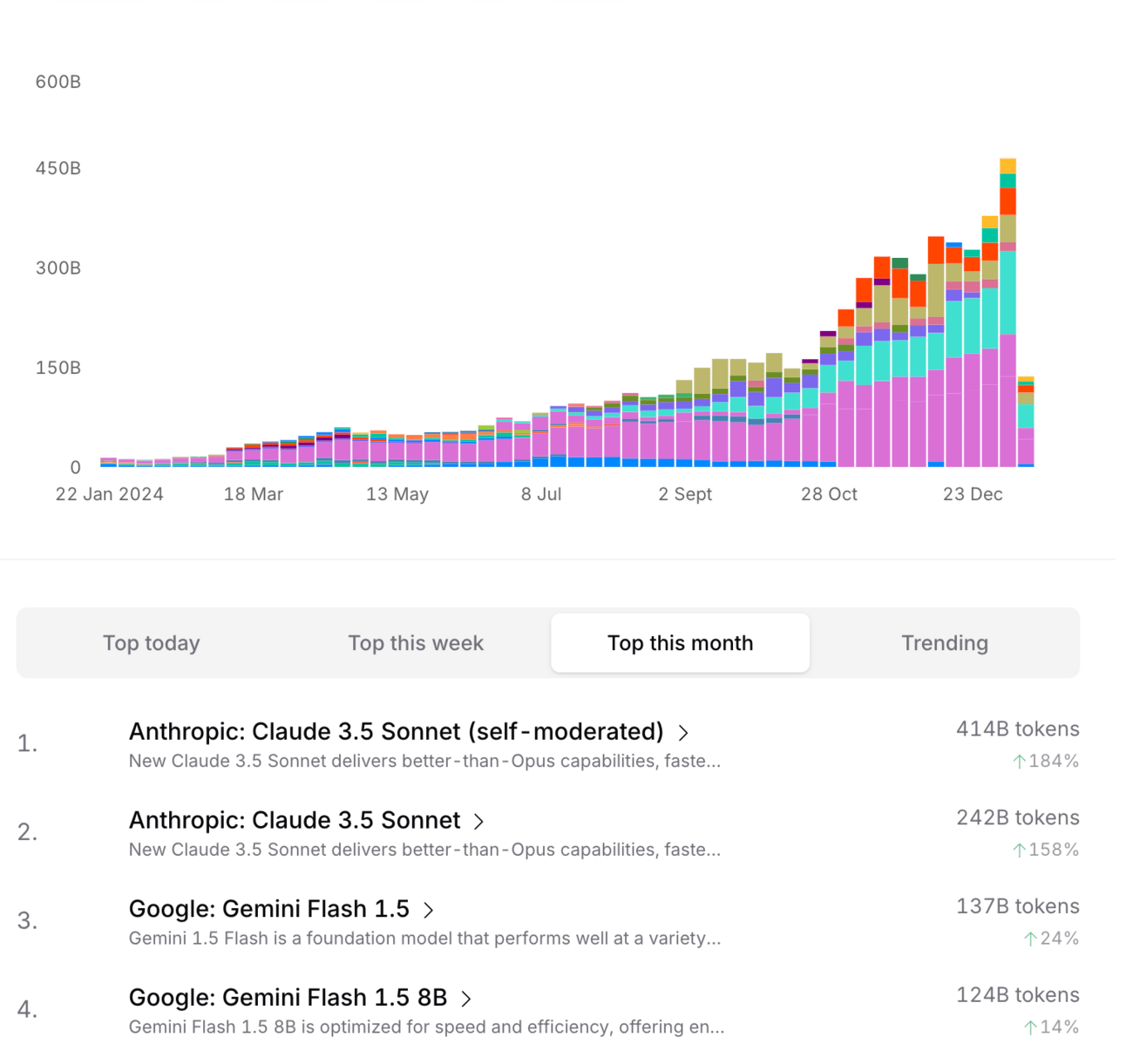

Its predecessor, Gemini Flash 1.5, is one of the most used models on the planet.

Mind you, this is the older version.

Gemini 2.0 Flash comes with a 128k context window, but, without a doubt the most impressive thing about it is its multimodality.

The model’s native video and audio processing is something to behold. Seriously, you have to try it.

Go to the Google AI Studio website. Select the “Stream Realtime” option on the left and you’ll have three options.

Talk with the model with only audio

Let the model see through your webcam or camera and have a chat about what you’re looking at

Share your screen with the model so it can see what you’re working on

It is very impressive (and kind of creepy) how natural it feels. I imagine Google will integrate this type of agent throughout their infrastructure.

It’s the perfect “study buddy” or “work assistant”.

Preview of Gemini Pro 2.0

You can actually try Gemini Pro 2.0 on the AI Studio website as well.

Although it hasn’t been announced officially as the next frontier model, this model is seriously good. I found it to be better than GPT-4o and, in some cases, on par with or even better than Claude 3.5 Sonnet.

The vibes are very good so far.

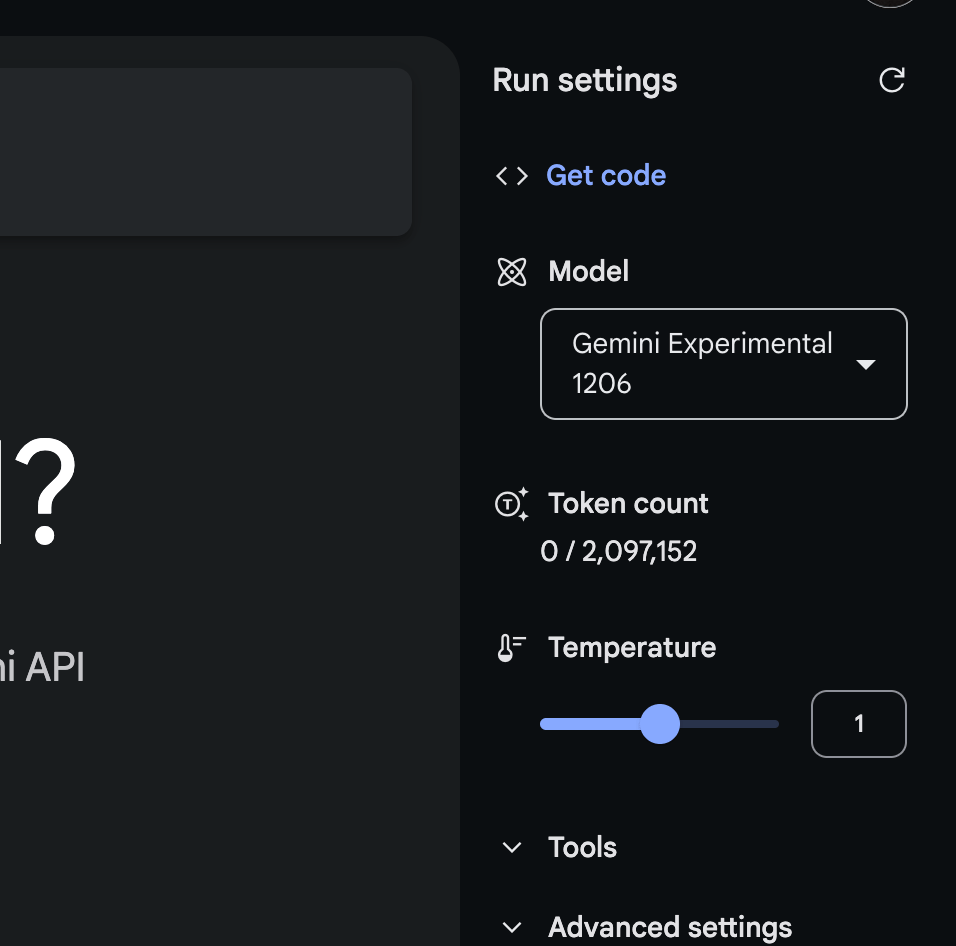

Make sure you have this model selected

You know the best part about this model?

See where it says “Token count”?

Yep, that’s a two million context window.

You might wonder, what am I even supposed to do with such a large context?

Well, here’s what I’ve been doing.

Feeding it dozens, and I mean like 30-40+ PDFs, and asking it for analysis/information.
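If you want a rough feel for whether a pile of documents will fit before you upload it, here is a minimal sketch. It assumes the 2 million token window mentioned above and a rule of thumb of about 4 characters per token for English text. That ratio is my assumption, not Google's tokenizer, and the function names and the output reserve are hypothetical, so treat the result as a ballpark only.

```python
import math

CONTEXT_WINDOW_TOKENS = 2_000_000  # the window size quoted in this post
CHARS_PER_TOKEN = 4                # assumption: rough heuristic, not the real tokenizer


def estimate_tokens(text: str) -> int:
    """Roughly estimate the token count of a piece of text."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def fits_in_context(documents: list[str], reserve_for_output: int = 8_000) -> bool:
    """Check whether all documents plus some room for the answer fit in the window.

    reserve_for_output is a hypothetical safety margin, not an official limit.
    """
    total = sum(estimate_tokens(doc) for doc in documents)
    return total + reserve_for_output <= CONTEXT_WINDOW_TOKENS


if __name__ == "__main__":
    # 40 extracted PDFs of about 100,000 characters each (made-up sizes)
    docs = ["x" * 100_000 for _ in range(40)]
    print(sum(estimate_tokens(d) for d in docs), fits_in_context(docs))
```

The token count AI Studio shows you is the real number. This is only for sanity checking before you start dragging files in.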

There are two amazing things about this model:

The 2 million token context window

Its (near) perfect retrieval

In my testing and while building products with this model, I have found its retrieval to be nigh perfect. I mean, it’s going through 30+ PDFs, finding patterns across them, and not making things up.

It quotes things correctly, it can easily read tables, graphs and diagrams, and it can interpret most types of documents like PDFs and Excel spreadsheets.

Even compared to Claude 3.5 Sonnet, which has been my daily driver for the last year, Google’s Gemini models are winning.

To me, especially for work, this model is an absolute beast.

If you have a lot of data, it is a no-brainer to use this model.

The best part?

It’s free.

On the aistudio.google.com website, you can try all the models for free.

If you work with a lot of data, I can’t recommend this workflow and model enough.

Whether or not you’re willing to pay for an AI tool, this is your best friend. Frankly speaking, for most people, no other tool is necessary.

Thinking models

You might remember that OpenAI’s o3 announcement showcased a new type of model use.

One where the model thinks and thinks and thinks before responding.

For OpenAI, it got them 88% on ARC-AGI which is very impressive.

But, you can’t exactly use o3 yet.

Don’t worry, Google’s got you covered there too.

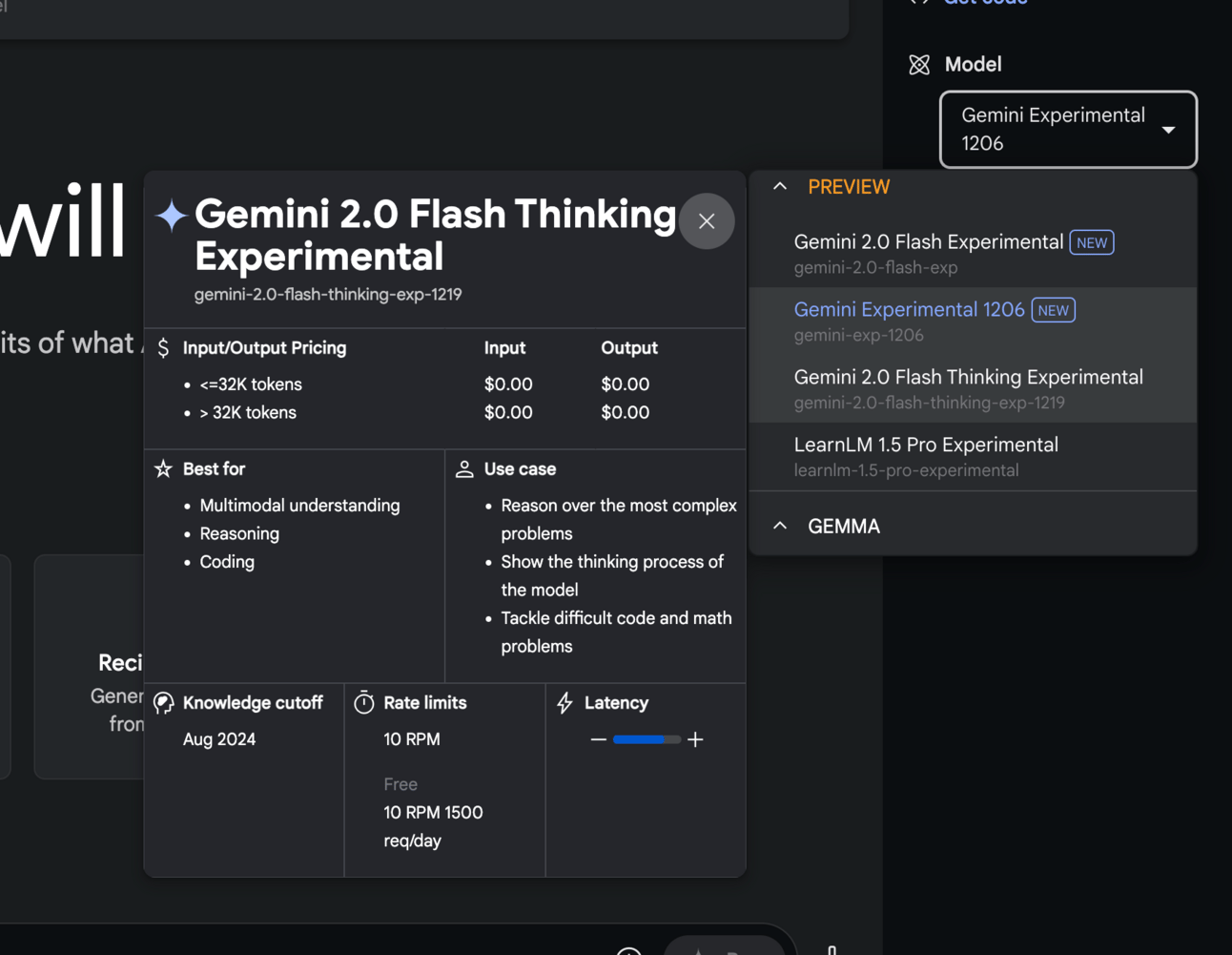

You can try the Gemini 2.0 Flash Thinking Experimental model.

This is supposed to be for reasoning over more complex problems.

If you do use it, let me know how you go! I’m very curious to hear how it went for you.

Chinese models aren’t stopping

Chinese AI lab Hailuo has released a new AI text generation model called MiniMax-01.

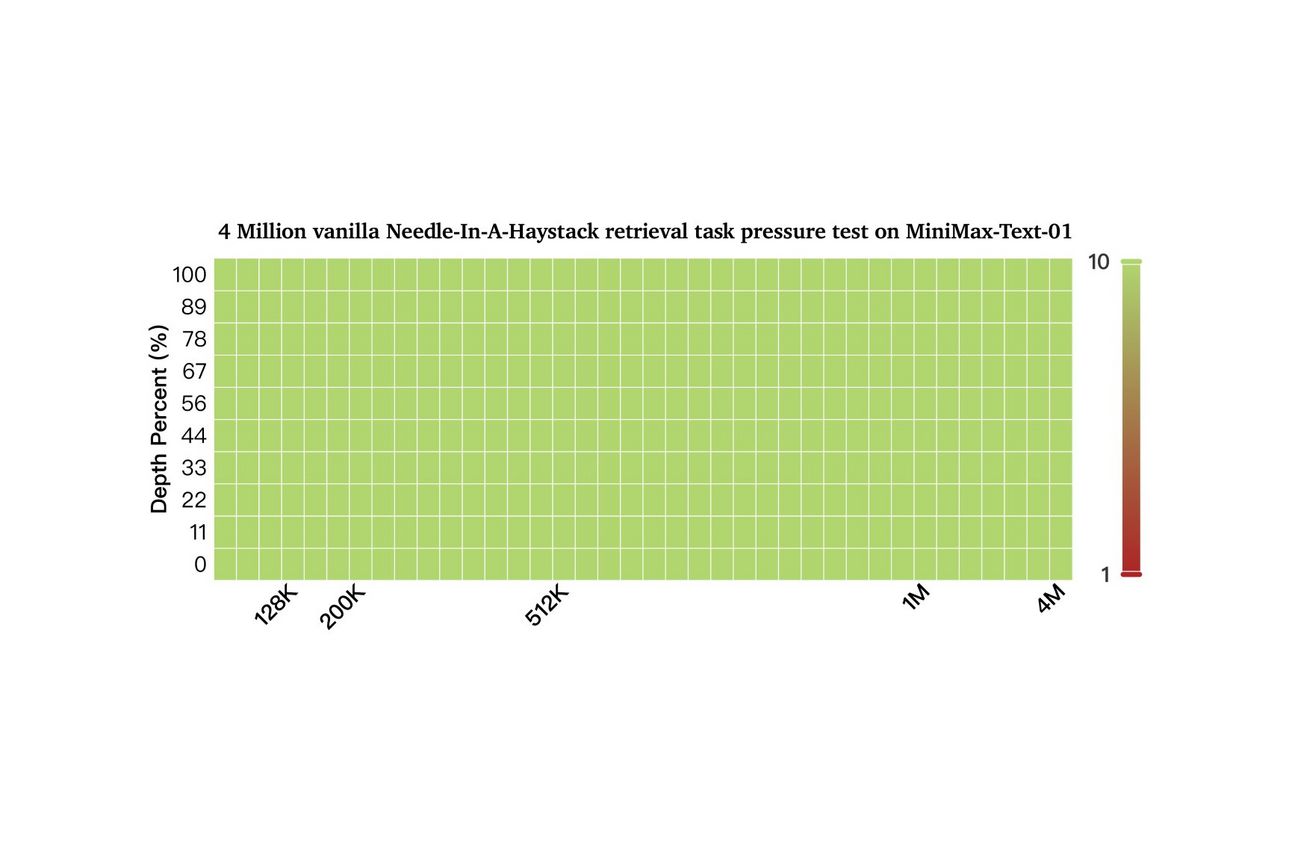

The model supports a whopping 4 Million token context window, which is 32x GPT-4o, 20x Claude 3.5 Sonnet and 2x Gemini.
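For anyone who wants to check the multiples, here is a quick sketch of the arithmetic. Only the 4 million figure is stated outright above. The other window sizes are the ones implied by the quoted ratios, so treat them as assumptions.

```python
# Context window sizes in tokens.
# 4M is from the text; the rest are inferred from the quoted ratios (assumptions).
CONTEXT_WINDOWS = {
    "MiniMax-01": 4_000_000,
    "GPT-4o": 128_000,
    "Claude 3.5 Sonnet": 200_000,
    "Gemini": 2_000_000,
}


def context_ratio(model: str, baseline: str) -> float:
    """How many times larger one model's context window is than another's."""
    return CONTEXT_WINDOWS[model] / CONTEXT_WINDOWS[baseline]


if __name__ == "__main__":
    for baseline in ("GPT-4o", "Claude 3.5 Sonnet", "Gemini"):
        print(f"MiniMax-01 vs {baseline}: {context_ratio('MiniMax-01', baseline):.2f}x")
```

With these sizes, the GPT-4o ratio comes out at 31.25, which rounds to the 32x quoted above.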

What’s really impressive about this model is that it performed essentially perfectly in the needle-in-the-haystack test across such a large context window.

Now, I wouldn’t write about this without actually trying and testing it myself and to be honest, the model has been surprisingly good.

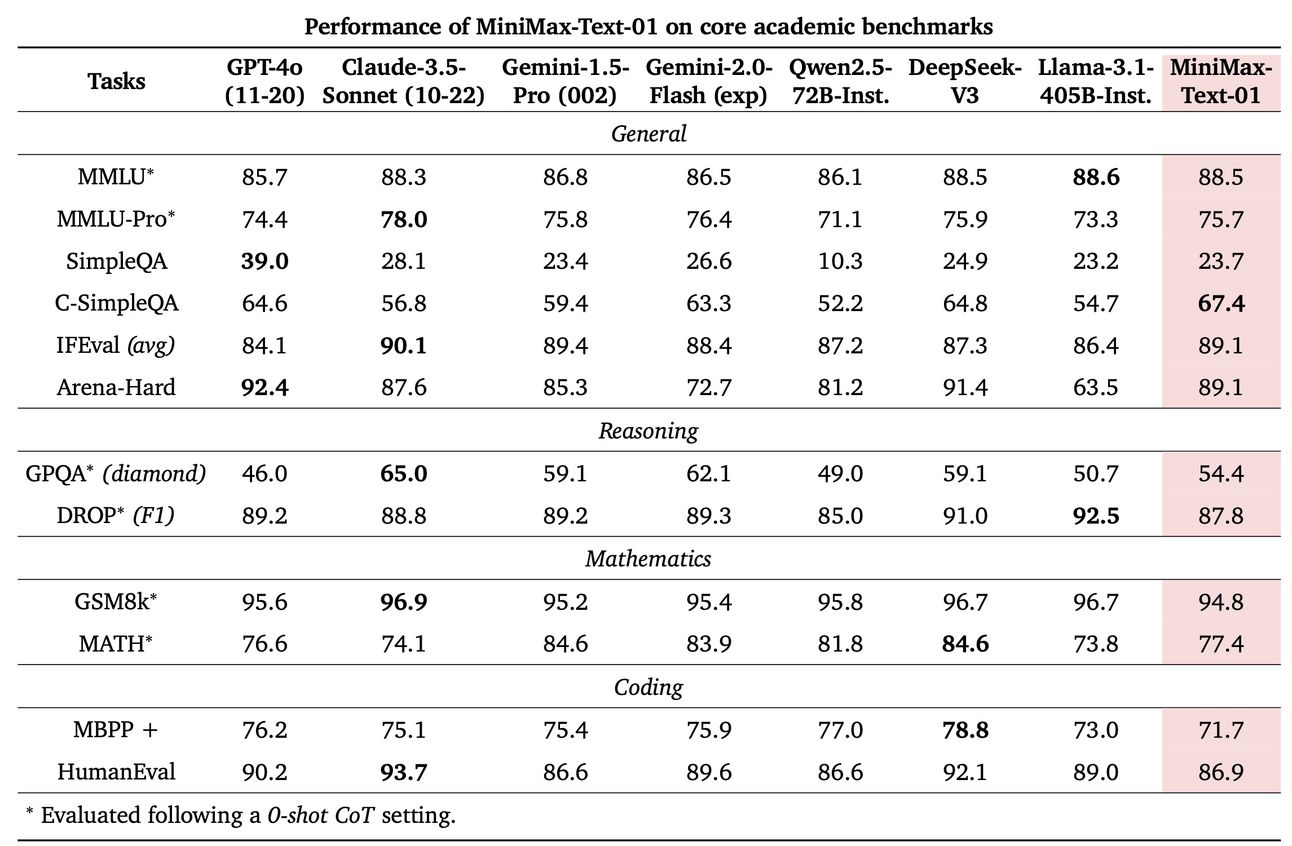

Usually, benchmarks don’t mean all that much, and that’s worth remembering when a model looks as good on them as this one does.

The vibes are good.

To my surprise, at least when it comes to coding, the model does a very good job understanding context and reasoning.

What makes this so surprising is that this model does not use the same transformer architecture as most AI models these days.

Hailuo have used an innovative approach using something called Lightning Attention Architecture, which allows them to not only serve a 4 Million token context window, but to serve the model for ridiculously cheap ($0.2/M input, $1.1/M output).
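To put those prices in perspective, here is a small sketch that turns the per-million rates quoted above into a cost per request. The function name and the example token counts are mine and purely illustrative.

```python
INPUT_PRICE_PER_M = 0.2   # USD per million input tokens, as quoted above
OUTPUT_PRICE_PER_M = 1.1  # USD per million output tokens, as quoted above


def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Estimate the USD cost of one request at the quoted per-million prices."""
    input_cost = input_tokens / 1_000_000 * INPUT_PRICE_PER_M
    output_cost = output_tokens / 1_000_000 * OUTPUT_PRICE_PER_M
    return input_cost + output_cost


if __name__ == "__main__":
    # Hypothetical request: fill the whole 4M window and get a 2,000 token answer
    print(f"${estimate_cost(4_000_000, 2_000):.4f}")
```

So, at these prices, filling the entire 4 million token window once costs well under a dollar.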

The model has 456B parameters which is going to be very important for the next point.

Project DIGITS

NVIDIA recently announced Project DIGITS at CES, which is essentially a mini AI computer for your home.

The little box comes with a GB10 Superchip, a 4TB SSD and 1 Petaflop of compute.

If these things mean nothing to you, then all you need to know is this.

With two of these, you could run the most powerful open source AI models privately in your home. That’s it. That’s all that matters.

Just imagine having the best open source models just sitting there running on your computer, powered by two little boxes.
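Here is a back-of-the-envelope sketch of why the 456B parameter count matters. It only counts the memory needed to hold the model weights at a given precision and ignores everything else a running model needs (the KV cache, activations and so on). The 128 GB of memory per box is my assumption and is not stated in this post, so check NVIDIA's spec sheet before relying on it.

```python
DIGITS_MEMORY_GB = 128  # assumption: memory per Project DIGITS box, not from this post


def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Memory needed just to hold the weights, in GB (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


def weights_fit(num_params: float, bits_per_param: int, num_boxes: int) -> bool:
    """Check whether the weights alone fit in the combined memory of the boxes."""
    return weight_memory_gb(num_params, bits_per_param) <= num_boxes * DIGITS_MEMORY_GB


if __name__ == "__main__":
    params = 456e9  # MiniMax-01 parameter count quoted above
    for bits in (16, 8, 4):
        print(bits, weight_memory_gb(params, bits), weights_fit(params, bits, num_boxes=2))
```

Under these assumptions, a 456B parameter model only squeezes into two boxes when its weights are compressed to about 4 bits each, which is why the parameter count matters so much here.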

There are open source models better than GPT-4o right now by the way.

This is a good sign that in future, it will be very, very easy to host and run powerful open source models locally, which is very exciting for the democratisation of AI.

Why this is so important

Take for example the results from a recent study on AI tutoring in Nigeria.

Across six weeks, students participated in after-school AI tutoring and the results were incredibly promising.

Twice a week, students would use Copilot with the help of their teachers to learn grammar and writing.

Mind you, many of the students had never even used a computer before, so they spent the initial stages of the program learning how to navigate a PC, set up accounts and prompt the AI.

This just makes the learning curve even more impressive.

After six weeks, the students took a pen and paper test.

Here’s what the results showed:

Test scores improved by 0.3 standard deviations, equivalent to 2 years of learning

Compared to other educational interventions, this program outperformed 80% of them

It’s not like the students did well on the tests at the end of the program and that’s it.

Students who participated in the pilot performed better on final exams months later, across subjects that weren’t even covered in the pilot.

Naturally, the pilot also showed that students who attended more AI sessions did better than those who didn’t.

Moreover, the program helped all students, not just the outliers - it particularly helped girls who were initially behind.

If the cost of running powerful open source models drops with devices like Project DIGITS, people all over the world will only need electricity to have incredibly powerful tools at their disposal.

Numerous studies, like the one I’ve just mentioned, have shown that AI can enhance learning at a rate we’ve never seen.

This is something that will (hopefully) change the world.

Don’t believe me?

Check out how Synthesis School uses AI to teach maths [Link]. Highly recommend watching this vid and seeing how AI can teach. Learning has never been easier.

As always, Thanks for Reading ❤️

Written by a human named Nofil
